
I am a Ph.D. student at the University of Michigan, Ann Arbor, supervised by Prof. Qing Qu. Previously, I was a research intern at Snap Inc., supervised by Ivan Skorokhodov, Aliaksandr Siarohin, and Sergey Tulyakov. I obtained my Bachelor's degree in Mechanical Engineering from Huazhong University of Science and Technology, advised by Prof. Zhigang Wu, and my Master's degree in Mechanical Engineering and Electrical and Computer Engineering from the University of Michigan, Ann Arbor, advised by Prof. Chad Jenkins. My research centers on generative models, with a particular focus on developing a rigorous theoretical understanding of diffusion models and using these insights to drive practical advances. I study their generalization behavior, interpretability, and underlying low-dimensional structures, and I apply these theoretical insights to improve the efficiency, controllability, and safety of diffusion-based generation. [Updated 10/2025]


[01/2026] Our work on AlphaFlow was accepted to ICLR 2026!

[09/2025] Our work on Shallow Diffuse was accepted to NeurIPS 2025 and selected as a Spotlight!

[09/2025] Our work on model collapse from the memorization-to-generalization (MtoG) perspective was accepted to NeurIPS 2025 and selected as a Spotlight!

[06/2025] I joined the Creative Vision Team at Snap Inc. as a research intern!


Huijie Zhang, Aliaksandr Siarohin, Willi Menapace, Michael Vasilkovsky, Sergey Tulyakov, Qing Qu, Ivan Skorokhodov. ICLR, 2026. ArXiv / Code

In this work, we propose α-Flow, a broad family of objectives that unifies flow matching, Shortcut Models, and MeanFlow under a single formulation. By adopting a curriculum strategy that smoothly anneals from trajectory flow matching to MeanFlow, our largest model, α-Flow-XL/2+, achieves new state-of-the-art results with vanilla DiT backbones, reaching FID scores of 2.58 (1-NFE) and 2.15 (2-NFE).


Huijie Zhang, Zijian Huang, Siyi Chen, Jinfan Zhou, Zekai Zhang, Peng Wang, Qing Qu. ArXiv / Website

In this work, we propose the probability flow distance (PFD), a theoretically grounded and computationally efficient metric for measuring distributional generalization. By applying PFD under a teacher-student evaluation protocol, we empirically uncover several novel generalization behaviors of diffusion models.


Wenda Li*, Huijie Zhang*, Qing Qu. NeurIPS, 2025 (Spotlight, top 3.2%). ArXiv / Code / Website

In this work, we propose Shallow Diffuse, which exploits the low-dimensional subspace of diffusion models to disentangle watermarking from the image generation process. We demonstrate, both empirically and theoretically, that Shallow Diffuse is robust and consistent, and that it outperforms previous watermarking techniques.


Lianghe Shi*, Meng Wu*, Huijie Zhang, Zekai Zhang, Molei Tao, Qing Qu. NeurIPS, 2025 (Spotlight, top 3.2%). ArXiv / Code / Website

In this work, we propose an entropy-based data selection strategy to mitigate model collapse in diffusion models. By identifying the transition from generalization to memorization, driven by declining entropy in synthetic training data, our method effectively prevents performance degradation. Empirical results demonstrate that our approach preserves diversity and visual quality in recursive generation, outperforming prior techniques.


Peng Wang*, Huijie Zhang*, Zekai Zhang, Siyi Chen, Yi Ma, Qing Qu. ArXiv / Code / Website

In this work, we provide theoretical insights into the connection between diffusion models and subspace clustering. This connection sheds light on how diffusion models transition from memorization to generalization and on the mechanism by which they break the curse of dimensionality.


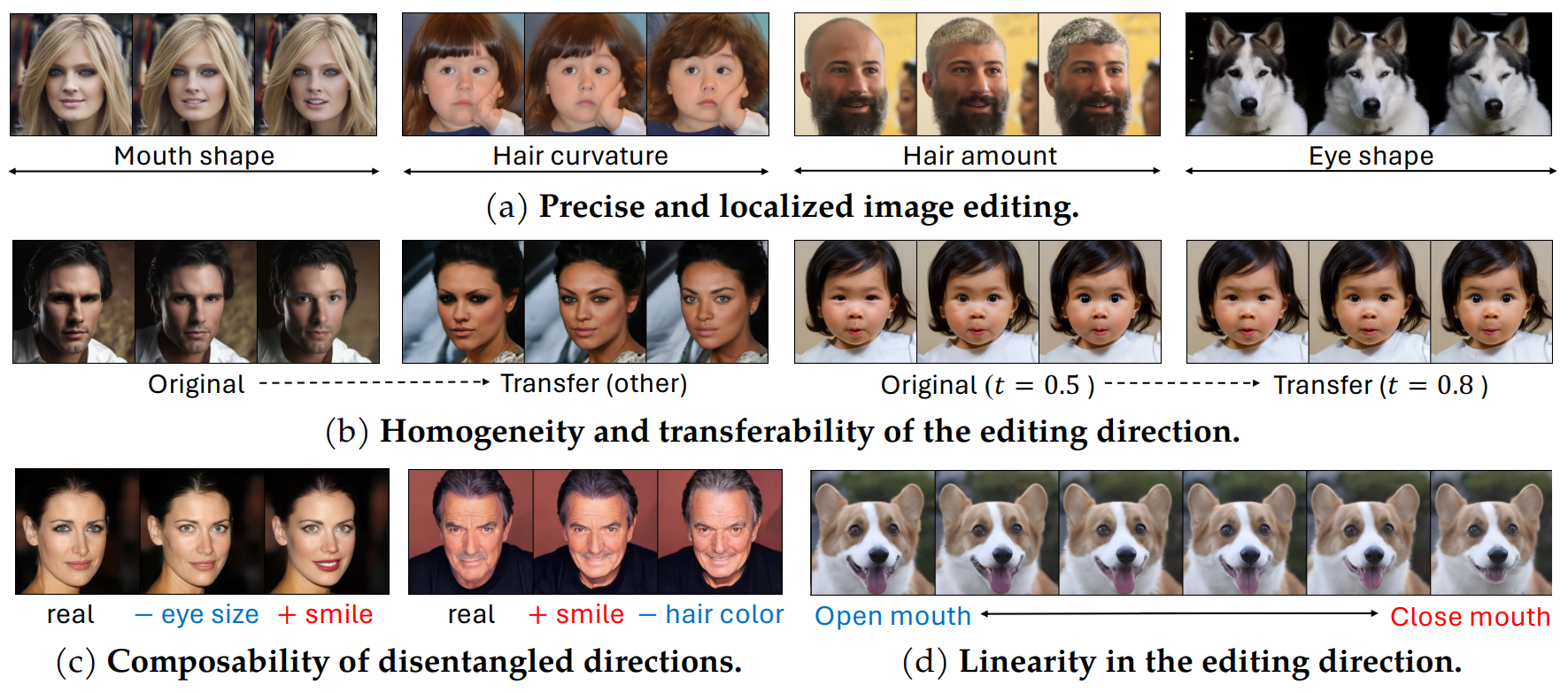

Siyi Chen*, Huijie Zhang*, Minzhe Guo, Yifu Lu, Peng Wang, Qing Qu. NeurIPS, 2024. ArXiv / Code / Website

We improve the understanding of the semantic space of diffusion models and propose LOCO Edit, an editing method that achieves precise and disentangled image editing without additional training. The method is supported by theoretical justification and exhibits several desirable properties: homogeneity, transferability, composability, and linearity.


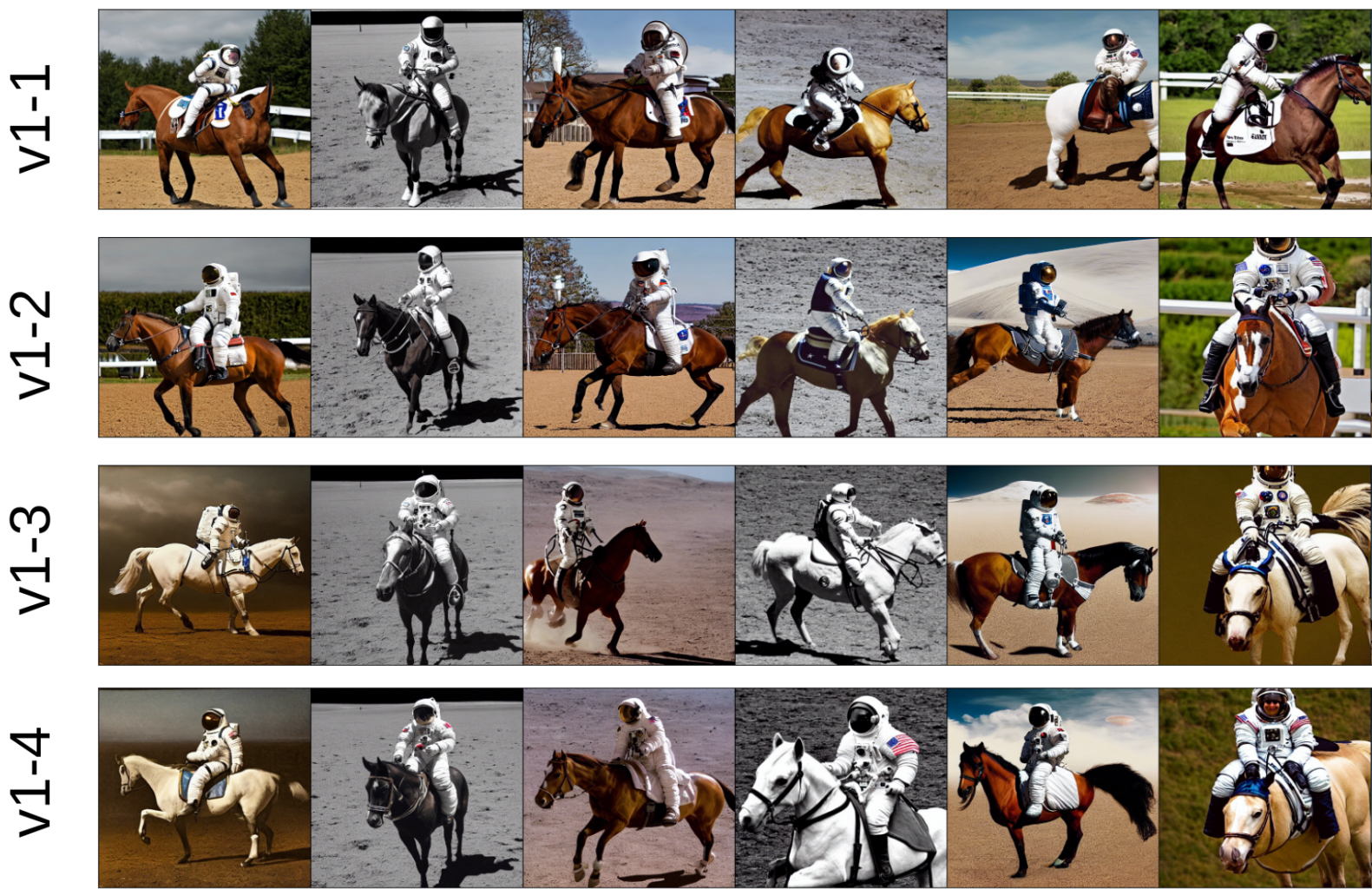

Huijie Zhang*, Jinfan Zhou*, Yifu Lu, Minzhe Guo, Peng Wang, Liyue Shen, Qing Qu. NeurIPS Workshop, 2023 (Best Paper Award); ICML, 2024. ArXiv / News / Talk / Code / Website

We investigate an intriguing and prevalent phenomenon of diffusion models: given the same starting noise input and a deterministic sampler, different diffusion models often yield remarkably similar outputs. We also reveal how this phenomenon relates to the generalizability of diffusion models.


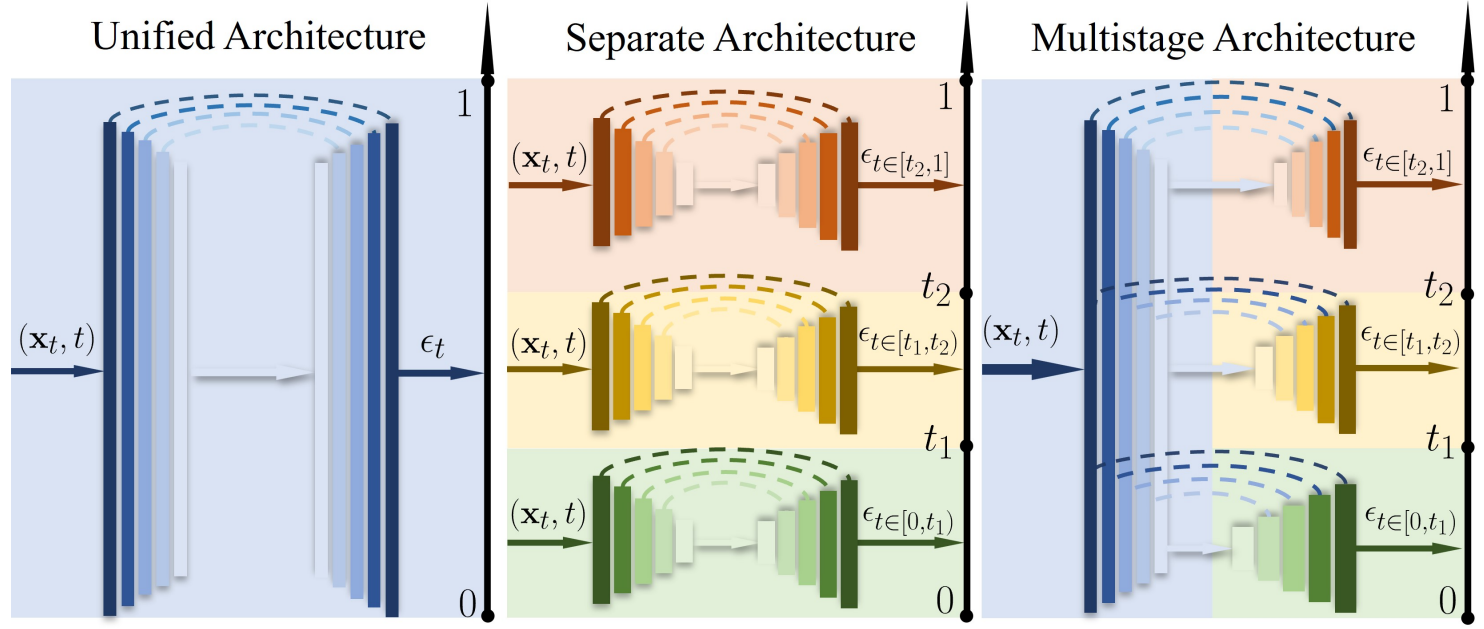

Huijie Zhang*, Yifu Lu*, Ismail Alkhouri, Saiprasad Ravishankar, Dogyoon Song, Qing Qu. CVPR, 2024. ArXiv / Website / GitHub / Talk

In this study, we significantly improve the training and sampling efficiency of diffusion models through a novel multi-stage framework. The method divides the time interval into several stages and uses a specialized multi-decoder U-Net architecture that combines time-specific decoders with a common encoder shared across all stages.


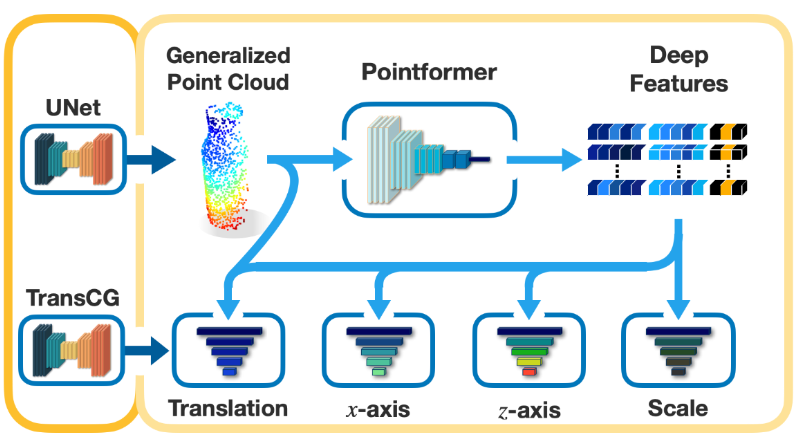

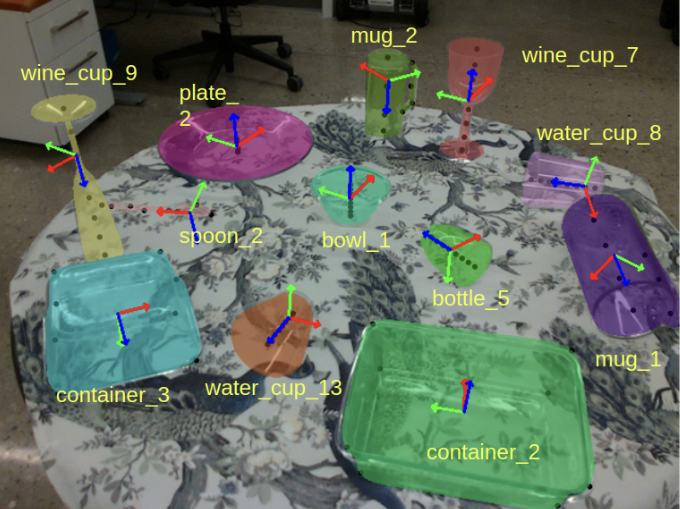

Huijie Zhang, Anthony Opipari, Xiaotong Chen, Jiyue Zhu, Zeren Yu, Odest Chadwicke Jenkins. ECCV Workshop, 2022. ArXiv / Website

We propose TransNet, a two-stage pipeline that learns to estimate category-level transparent object pose using localized depth completion and surface normal estimation.


Xiaotong Chen, Huijie Zhang, Zeren Yu, Anthony Opipari, Odest Chadwicke Jenkins. ECCV, 2022. ArXiv / GitHub / Website

We collected a large-scale transparent object dataset with RGB-D data and annotated poses, and we benchmarked transparent object depth completion and pose estimation on this dataset.


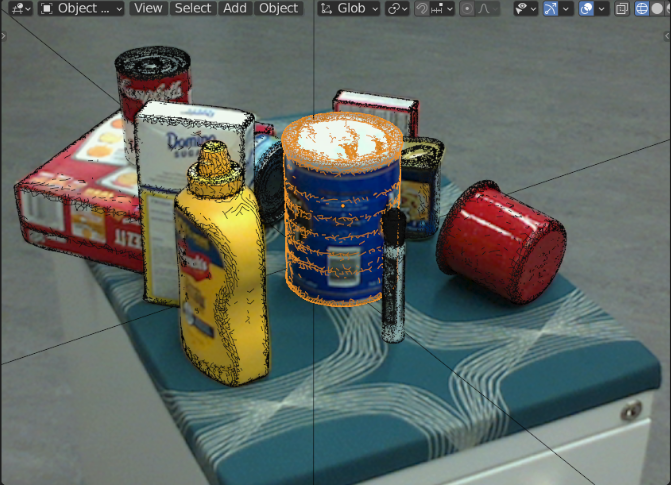

Xiaotong Chen, Huijie Zhang, Zeren Yu, Stanley Lewis, Odest Chadwicke Jenkins. IROS, 2022. ArXiv / GitHub / Website

ProgressLabeller is an efficient 6D pose annotation method. It is also the first open-source tool compatible with transparent objects. It is implemented as a Blender add-on for ease of use.
