Diffusion models have emerged as a powerful class of generative models, capable of producing high-quality samples that generalize beyond the training data.

However, evaluating this generalization remains challenging: theoretical metrics are often impractical to compute for high-dimensional data, while existing practical metrics do not rigorously measure generalization.

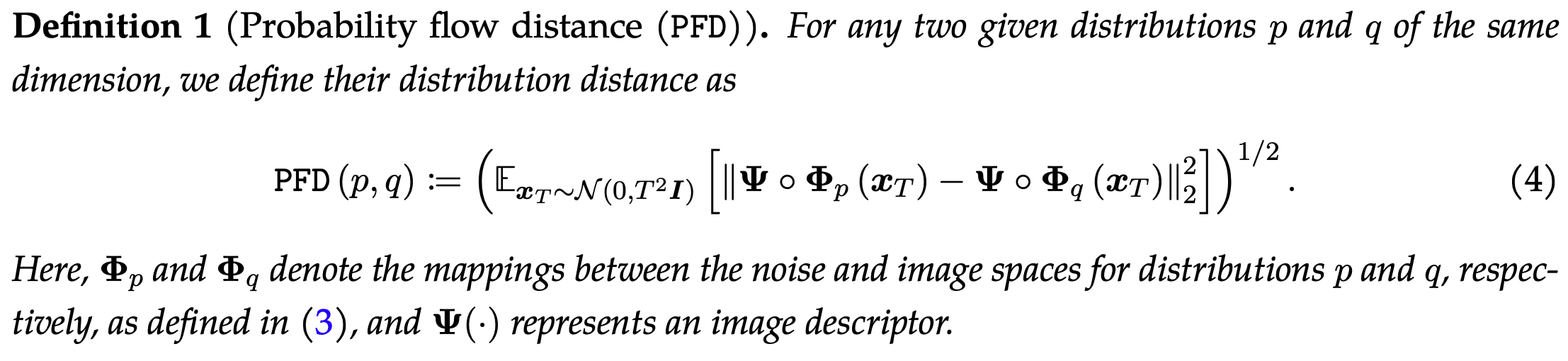

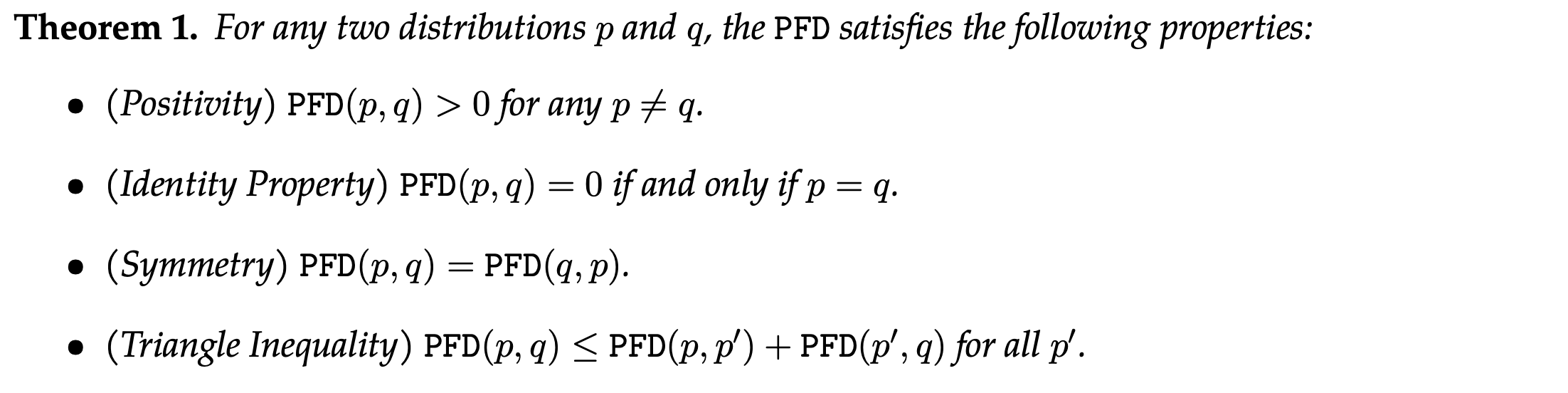

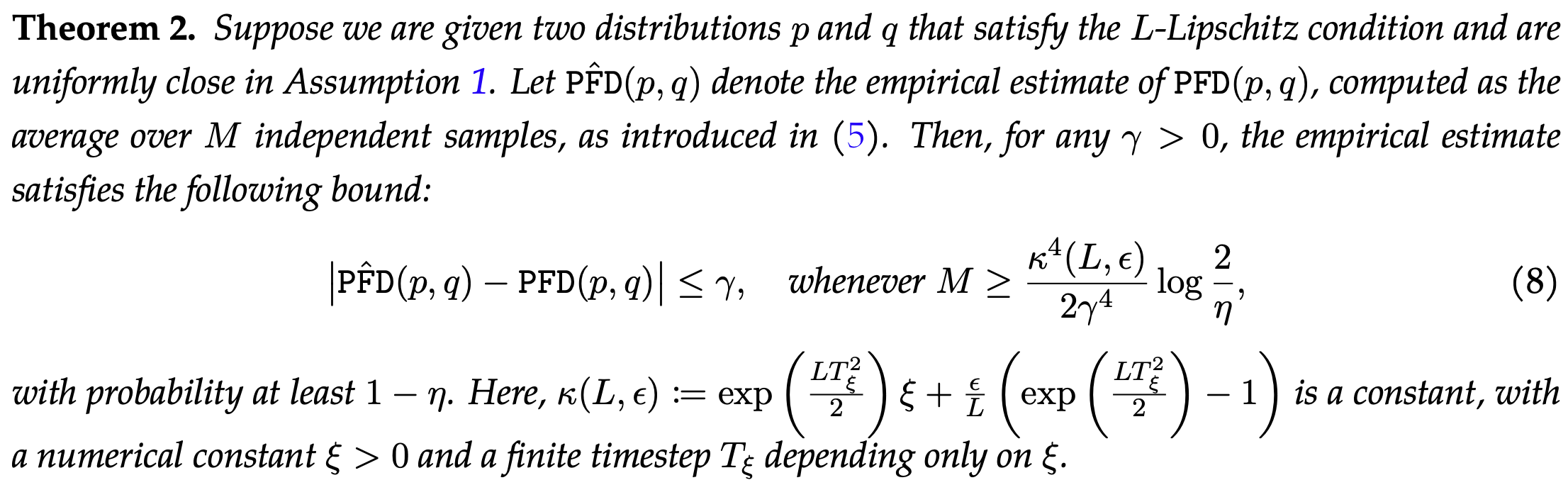

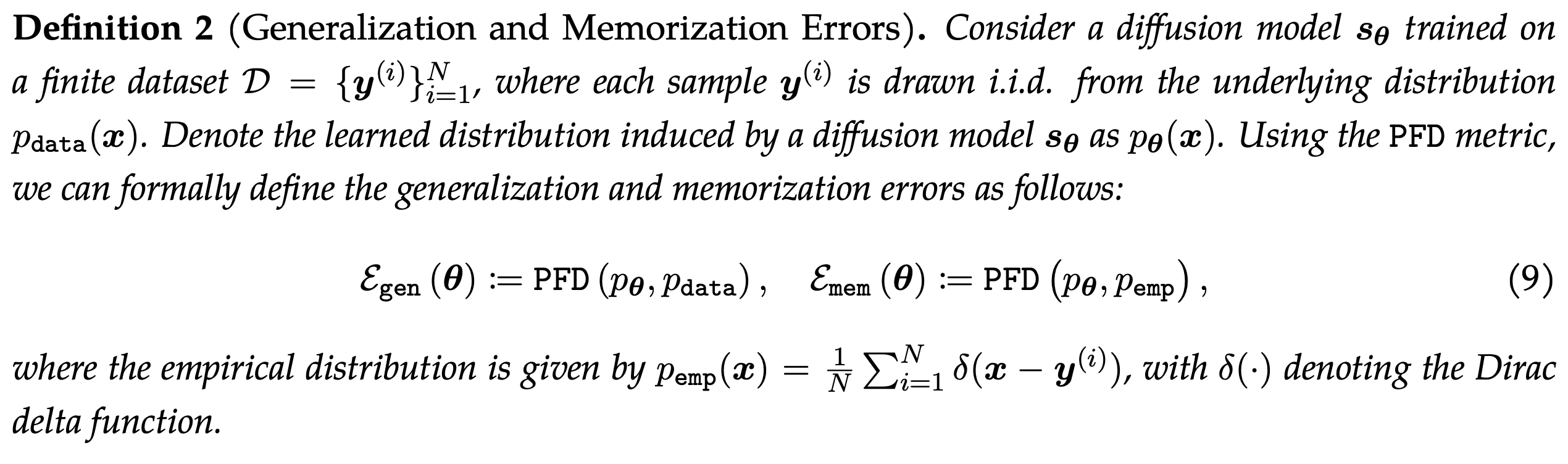

In this work, we bridge this gap by introducing probability flow distance (PFD), a theoretically grounded and computationally efficient metric to measure distributional generalization. Specifically, PFD quantifies the distance between distributions by comparing their noise-to-data mappings induced by the probability flow ODE.
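The idea of comparing noise-to-data mappings can be illustrated with a small toy sketch. It rests on several assumptions that are not taken from the text above. First, it assumes PFD is the root-mean-squared distance between the outputs of the two probability flow ODE maps when both are driven by the same Gaussian noise. Second, it uses a variance-exploding process `x_t = x_0 + t * eps`. Third, the data are isotropic Gaussians, so the exact score is available in closed form; in practice the score would come from a trained network. The helper names (`gaussian_score`, `pf_ode_map`, `probability_flow_distance`) and the defaults `T=80.0` and `n_steps=2000` are hypothetical.

```python
import numpy as np


def gaussian_score(x, t, mu, s):
    # Exact score of p_t = N(mu, (s^2 + t^2) I), the marginal of the assumed
    # VE process x_t = x_0 + t * eps when x_0 ~ N(mu, s^2 I).
    return -(x - mu) / (s**2 + t**2)


def pf_ode_map(z, mu, s, T=80.0, n_steps=2000):
    # Noise-to-data map: start at x_T = T * z and integrate the probability
    # flow ODE dx/dt = -t * score(x, t) backward from t = T to t = 0
    # with explicit Euler steps (dt is negative).
    ts = np.linspace(T, 0.0, n_steps + 1)
    x = T * z
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        drift = -t_cur * gaussian_score(x, t_cur, mu, s)
        x = x + (t_next - t_cur) * drift
    return x


def probability_flow_distance(params_a, params_b, dim=2, n_samples=4096, seed=0):
    # Hypothetical PFD estimate: push the SAME noise samples through both
    # maps and take the root-mean-squared Euclidean distance of the outputs.
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_samples, dim))
    xa = pf_ode_map(z, *params_a)
    xb = pf_ode_map(z, *params_b)
    return float(np.sqrt(np.mean(np.sum((xa - xb) ** 2, axis=1))))


# Usage: compare a reference Gaussian with a mean-shifted and a wider one.
if __name__ == "__main__":
    ref = (np.zeros(2), 1.0)
    print(probability_flow_distance(ref, ref))                       # identical maps
    print(probability_flow_distance(ref, (np.array([3.0, 0.0]), 1.0)))
    print(probability_flow_distance(ref, (np.zeros(2), 2.0)))
```

The noise is shared between the two maps, so identical distributions give exactly zero. In this isotropic Gaussian toy with large `T`, each map approaches `x = mu + s * z`. The estimate then tends toward the 2-Wasserstein distance `sqrt(||mu_a - mu_b||^2 + dim * (s_a - s_b)^2)`, up to Euler and Monte Carlo error.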

Moreover, by applying PFD under a teacher-student evaluation protocol, we empirically uncover several key generalization behaviors of diffusion models, including:

- Scaling behavior from memorization to generalization.

- Early learning and double descent training dynamics.

- Bias-variance decomposition.

Beyond these insights, our work lays a foundation for future empirical and theoretical studies on generalization in diffusion models.